Azure Machine Learning supports a range of data sources – primarily cloud data sources. One of the more interesting ones is Hive, which lets you write SQL-like queries against HDInsight clusters. In this post, I will show how you can configure the Reader component in an Azure ML experiment to load data through a Hive query.

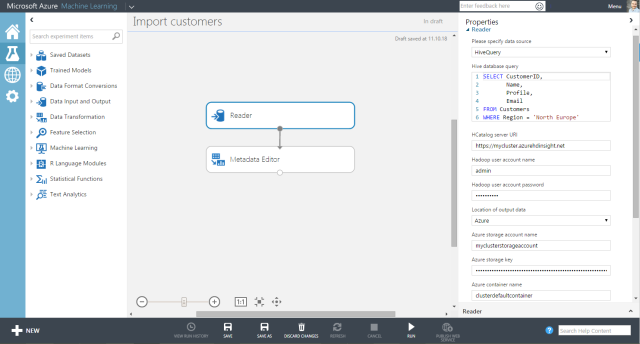

First, create a new experiment in Azure ML and drag a ‘Reader’ component onto the canvas. Then drag a ‘Metadata editor’ component onto the canvas too, and connect the output of the reader to the input of the metadata editor. Your experiment should look like this.

I will now walk you through each of the settings in the reader component.

Data Source

Select Hive Query here. This lets you specify a Hive query written in the HiveQL language.

Hive Database Query

Specify your Hive query here. (See the HiveQL language reference for the syntax.)

HCatalog server URI

HCatalog is a table and storage management layer for Hadoop. HCatalog exposes a REST API named WebHCat, through which Hive queries can be queued and job status monitored. WebHCat was previously known as Templeton, which is why queries against WebHCat are based on the URI http://clustername.azurehdinsight.net/templeton/v1/xxx.

In this field, you should type in the URI of the HDInsight cluster.
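To make the relationship between the cluster URI and WebHCat a bit more concrete, here is a minimal Python sketch of the kind of requests that can be made against the Templeton endpoints. The Reader does this work for you, so this is only an illustration, not what Azure ML actually runs. The cluster name, credentials, status directory and the table name `hivesampletable` are placeholders I have assumed, and the sketch assumes the cluster gateway is reached over HTTPS with basic authentication. The requests are only built, not sent.

```python
import base64
import urllib.parse
import urllib.request


def _basic_auth(user, password):
    """Return an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def hive_submit_request(cluster_uri, user, password, query, status_dir):
    """Build (but do not send) a WebHCat request that queues a Hive query."""
    url = cluster_uri.rstrip("/") + "/templeton/v1/hive"
    body = urllib.parse.urlencode({
        "user.name": user,        # Hadoop user the job runs as
        "execute": query,         # the HiveQL statement to run
        "statusdir": status_dir,  # where WebHCat writes job status and output
    }).encode("utf-8")
    request = urllib.request.Request(url, data=body, method="POST")
    request.add_header("Authorization", _basic_auth(user, password))
    return request


def job_status_request(cluster_uri, user, password, job_id):
    """Build (but do not send) a WebHCat request that asks for a job's status."""
    params = urllib.parse.urlencode({"user.name": user})
    url = f"{cluster_uri.rstrip('/')}/templeton/v1/jobs/{job_id}?{params}"
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", _basic_auth(user, password))
    return request


# Placeholder values (assumptions): replace with your own cluster and account.
submit = hive_submit_request(
    "https://clustername.azurehdinsight.net",
    "admin",
    "your-password",
    "SELECT devicemake, COUNT(*) AS hits FROM hivesampletable GROUP BY devicemake",
    "/example/status",
)
print(submit.get_method(), submit.full_url)
```

Passing the returned object to `urllib.request.urlopen` would actually submit the query, so only do that against a cluster you own.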

Hadoop user account name

A valid user account for the HDInsight cluster, like ‘admin’.

Hadoop user account password

The password associated with the Hadoop user account.

Location of output data

When a Hive query executes successfully, its result is stored. Depending on your configuration, the result can be stored either in Azure Blob storage or in HDFS. In this example, I’m using Azure Blob storage.

Azure storage account name

Type in the name of the storage account used by the HDInsight cluster. If you are not sure of this setting, look at the Dashboard page of the HDInsight cluster in the Azure Management portal. The storage account is listed under Linked resources.

Azure storage key

The storage key for the Azure Blob storage account. This can be found under ‘Manage Access Keys’ on the storage account in the Azure Management portal.

Azure container name

Type in the name of the default container for the HDInsight cluster. The container is part of the Azure storage account.
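If it helps to see how the storage account name and the container name fit together, HDInsight addresses blobs with URIs of the form `wasb://container@account.blob.core.windows.net/path`. The small Python sketch below builds such a URI. The function name, the account and container names and the path are placeholders I have made up, and the sketch assumes the standard public Azure endpoint suffix.

```python
def wasb_uri(container, account, path=""):
    """Build the wasb:// URI for a path in an Azure Blob storage container."""
    # Assumption: the storage account uses the public blob.core.windows.net suffix.
    return f"wasb://{container}@{account}.blob.core.windows.net/{path.lstrip('/')}"


# Placeholder names (assumptions): use your own container and storage account.
print(wasb_uri("mycontainer", "mystorageaccount", "/hive/output"))
```

The storage key is not part of the URI; it is only used to authorize access to the account.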

If you want to rename the fields coming from the Hive query, use the metadata editor component.

That’s it. You are ready to make your HDInsight cluster crunch some data and load the result into Azure ML.